Real-time ASR Providers WER and Latency

At Vocinity, we connect to many real-time ASR providers as part of our Voice / Video bot solutions. Each provider loves to say they are the best, but how do they really stack up? There are many factors in choosing a real-time ASR, but for this test we focused on accuracy, measured as Word Error Rate (WER), and latency. Testing latency is relatively easy, but WER is much more challenging. When a user says “forty two”, one ASR may respond with “42”, another with “forty two”, and another with “forty-two”. The utterance “okay” may come back as “OK” from one ASR and “okay” from another. We normalized these formatting differences, along with capitalization and punctuation, before scoring, so that they did not count as errors against the ground truth.
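
To make the normalization idea concrete, here is a minimal sketch of how a normalized WER could be computed. It is not our actual scoring pipeline: the alias table, the 0 to 99 number expansion, and the function names (`normalize`, `wer`) are assumptions chosen for illustration, and a real setup would need a much larger set of rules.

```python
import re

# Hypothetical, deliberately tiny normalization tables for illustration only.
ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven", "eight",
        "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
        "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]
ALIASES = {"ok": "okay"}


def number_to_words(n: int) -> str:
    """Spell out an integer in the range 0..99."""
    if n < 20:
        return ONES[n]
    tens, ones = divmod(n, 10)
    return TENS[tens] if ones == 0 else f"{TENS[tens]} {ONES[ones]}"


def normalize(text: str) -> list[str]:
    """Lowercase, drop punctuation, split hyphens, expand small numbers and aliases."""
    text = text.lower().replace("-", " ")
    text = re.sub(r"[^\w\s']", " ", text)  # keep apostrophes for contractions
    words = []
    for token in text.split():
        if re.fullmatch(r"[0-9]{1,2}", token):
            words.extend(number_to_words(int(token)).split())
        else:
            words.append(ALIASES.get(token, token))
    return words


def wer(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = normalize(reference), normalize(hypothesis)
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, 1):
        cur = [i]
        for j, hyp_word in enumerate(hyp, 1):
            cur.append(min(prev[j] + 1,                           # deletion
                           cur[j - 1] + 1,                        # insertion
                           prev[j - 1] + (ref_word != hyp_word)))  # substitution
        prev = cur
    if not ref:
        return float(bool(hyp))
    return prev[-1] / len(ref)
```

With these rules, `wer("Okay, forty two.", "OK 42")` scores as a perfect match, while a genuinely wrong or missing word still counts as an error.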

Most ASR test datasets consist of relatively clean audio. For this test, we used real-world samples, many with noise and hard-to-understand accents. The sample set comprised 30% 8 kHz narrowband and 70% 16 kHz wideband .wav files. We used each provider's real-time transcription API, streaming the test .wav files in 100 ms chunks.
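
The chunked streaming can be sketched as below, using only Python's standard `wave` module. This is an illustrative assumption of how such a test harness might slice the audio, not our production code; the function name `iter_wav_chunks` and the `realtime` pacing flag are hypothetical.

```python
import time
import wave
from typing import Iterator


def iter_wav_chunks(path: str, chunk_ms: int = 100,
                    realtime: bool = False) -> Iterator[bytes]:
    """Yield raw PCM audio from a .wav file in fixed-duration chunks.

    At 8 kHz a 100 ms chunk holds 800 frames; at 16 kHz it holds 1600.
    The final chunk may be shorter than chunk_ms.
    """
    with wave.open(path, "rb") as wav:
        frames_per_chunk = wav.getframerate() * chunk_ms // 1000
        while True:
            chunk = wav.readframes(frames_per_chunk)
            if not chunk:
                break
            yield chunk
            if realtime:
                # Pace the stream roughly like a live microphone would.
                time.sleep(chunk_ms / 1000)
```

Each yielded chunk would then be handed to whatever streaming client the provider's SDK exposes. That call differs per provider and is left out here.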

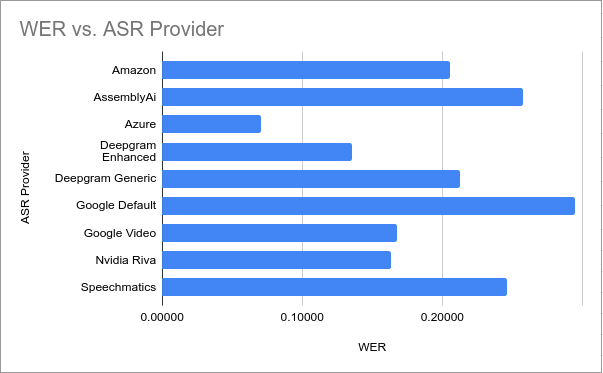

As the results show, Microsoft Azure had the lowest WER and therefore the highest accuracy. It was surprising how much better Microsoft did than Google in the WER category.

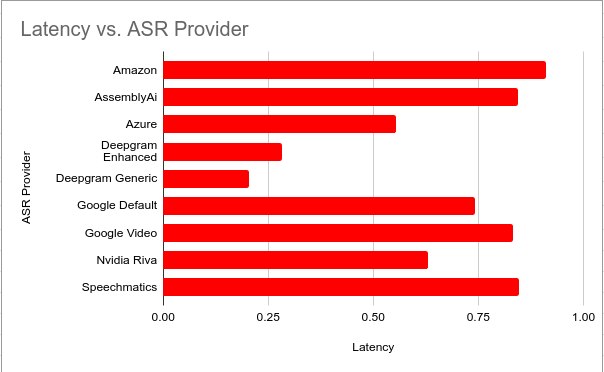

On the latency front, Deepgram was the undisputed king: its Enhanced model came in at less than half the latency of Azure, and its Generic model was faster still.